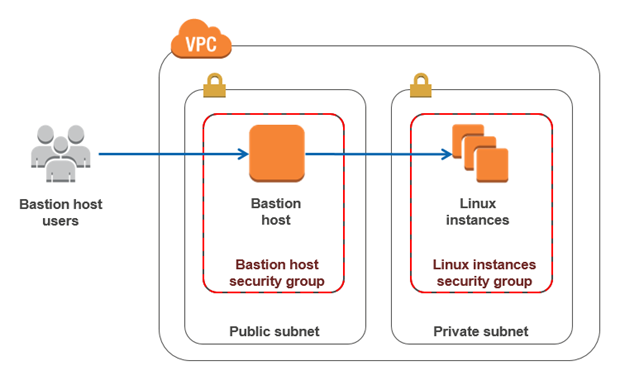

using a bastion host to access a private VPC in AWS

Using Ansible to manage internal VPC private instances without using VPNs, by deploying an SSH proxy bastion host.

background

When dealing with a web stack or AWS infrastructure, how are private instances that do NOT need a public IP address managed? It’s not an extremely difficult question. In many cases VPNs are used for this purpose. But what if a VPN isn’t needed? It’s arguably overkill and it can introduce a lot of overhead, creating multiple site-to-site VPNs and linking various regions together.

And that’s where this playbook comes in. All SSH traffic destined for private instances within the VPC is proxied through a single bastion host. This host acts as both the NAT gateway for internal instances and the SSH proxy for managing/configuring those instances. No VPN needed to the VPC, just pure SSH.

Another fun trick in this playbook is how to capture the SSH fingerprint and the corresponding SSH public key. When connecting to a new instance for the first time, how do you verify the SSH host key? How do you verify that you are not being man-in-the-middle’d? A common workaround for Ansible users has been to disable host key verification. This is not a good idea and should be avoided at all costs. This playbook has a nice little method to get around that…

On to the meat.

the stuffs - preamble

The usual preamble. I build my playbooks to be as modular as possible; I wrap up everything, AWS secret and access keys included, into a vault file. This way I have one password file or password to protect.
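As a rough sketch of what such a vault file might contain: the tasks in this post reference vault.aws_access_key and vault.aws_secret_key, so a matching structure could look like the following. The file name and the placeholder values are assumptions for illustration only.

```yaml
# vault.yml (hypothetical file name), shown before encryption.
# Only the two key names come from the tasks in this post;
# the values are placeholders.
vault:
  aws_access_key: "REPLACE_WITH_ACCESS_KEY"
  aws_secret_key: "REPLACE_WITH_SECRET_KEY"
```

Encrypting the file with ansible-vault encrypt then leaves a single vault password to protect.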

I am currently using Ansible 2.1 for this playbook.

playbook overview

This playbook is a bit different than previous playbooks. I used to use a lot of implicit variables with host_vars and group_vars. This layout will load variables explicitly, using vars_files.
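A minimal sketch of what explicit loading with vars_files can look like. The file paths and the shortened role list are assumptions, not the playbook's actual layout.

```yaml
# Sketch of a play that loads its variables explicitly.
# File names below are hypothetical.
- hosts: localhost
  connection: local
  gather_facts: yes          # ansible_env.HOME is used by later roles
  vars_files:
    - vars/vault.yml         # encrypted with ansible-vault
    - vars/vpc.yml           # e.g. vpc.region, vpc.cidr_block
  roles:
    - aws.bastionhost
    - aws.routes
```

The upside of this layout is that every variable source is visible in the play itself, rather than being picked up implicitly from host_vars or group_vars.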

The playbook itself does the following:

- Load variables.

- Create and configure the VPC/subnets and gather facts on them.

- Create the bastionhost instance in AWS.

- Configure routing so that internal instances use the bastion host as a route.

- Create security groups.

- Create IAM role profile(s) (not really used here, but good for future use/changes).

- Create EC2 instances and gather facts on them.

- Configure SSH to use the proxy. Gather the SSH public keys through the EC2 console. Copy them into the known_hosts file.

- Add all EC2 instances from aws.ec2_facts to different Ansible groups.

- Configure the bastionhost.

- Configure the internal instances, demonstrating that there is SSH access into the instances and the bastionhost is acting as the NAT instance.

Now for some of the highlights.

aws.bastionhost

The first few roles create the VPC and gather facts about that VPC. Straightforward, really. The really interesting things happen when the role [aws.bastionhost](https://github.com/bonovoxly/playbook/tree/master/ansible-roles/aws.bastionhost) runs. The bastion host security group is created and tagged, as it needs to exist before the instance is created. The VPC subnet ID is retrieved using the ec2_vpc_subnet_facts module. The bastion host instance is then created; note that the source/destination check is disabled, which is what allows the instance to forward traffic as a NAT:

    - name: Create bastionhost instance.
      ec2:
        aws_secret_key: "{{ vault.aws_secret_key }}"
        aws_access_key: "{{ vault.aws_access_key }}"
        region: "{{ vpc.region }}"
        assign_public_ip: "{{ ec2_bastionhost.assign_public_ip }}"
        count_tag:
          Name: "{{ ec2_bastionhost.instance_tags.Name }}"
        exact_count: "{{ ec2_bastionhost.exact_count }}"
        group: "{{ ec2_bastionhost.groups }}"
        instance_tags: "{{ ec2_bastionhost.instance_tags }}"
        image: "{{ vpc.image }}"
        instance_type: "{{ ec2_bastionhost.instance_type }}"
        keypair: "{{ ec2_bastionhost.keypair }}"
        source_dest_check: no
        vpc_subnet_id: "{{ vpc_subnet_facts.subnets|map(attribute='id')|list|first }}"
        wait: "{{ ec2_bastionhost.wait }}"
      register: bastionhost_instance_results

With the bastion host created, the settings of that host are now known. The private IP address of the instance can be used to form the internal security group that will allow SSH access from the bastion host to the internal instances:

    - name: Create bastionhost SSH inbound rules for internal instances.
      ec2_group:
        aws_secret_key: "{{ vault.aws_secret_key }}"
        aws_access_key: "{{ vault.aws_access_key }}"
        region: "{{ vpc.region }}"
        description: "{{ securitygroups_bastionhost_internal.tags.Name }} SSH rules."
        name: "{{ securitygroups_bastionhost_internal.name }}"
        rules:
          - proto: tcp
            from_port: 22
            to_port: 22
            cidr_ip: "{{ bastionhost_instance_results.tagged_instances.0.private_ip }}/32"
        vpc_id: "{{ vpc_id_fact }}"
      register: bastionhost_inbound_internal_results

    - name: Tag bastionhost SSH inbound rules for internal instances.
      ec2_tag:
        aws_secret_key: "{{ vault.aws_secret_key }}"
        aws_access_key: "{{ vault.aws_access_key }}"
        region: "{{ vpc.region }}"
        resource: "{{ bastionhost_inbound_internal_results.group_id }}"
        state: present
        tags: "{{ securitygroups_bastionhost_internal.tags }}"

Finally, the bastion_public_dns_name fact is set. It is passed into the localhost.bastion_ssh_config role as bastion_vars, where it is used to configure the ~/.ssh/config file.
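A sketch of how that hand-off might look. It assumes the ec2 module's tagged_instances output carries a public_dns_name attribute, and the exact way the role receives bastion_vars is a guess at the playbook's wiring rather than a copy of it.

```yaml
# In the aws.bastionhost role (sketch; attribute name is assumed):
- name: Set the bastion host public DNS name fact.
  set_fact:
    bastion_public_dns_name: "{{ bastionhost_instance_results.tagged_instances.0.public_dns_name }}"

# In the playbook, passing the fact into the role as bastion_vars
# (hypothetical play fragment):
- hosts: localhost
  connection: local
  roles:
    - role: localhost.bastion_ssh_config
      bastion_vars: "{{ bastion_public_dns_name }}"
```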

aws.routes

This role configures the routing within the VPC. It verifies that there is an Internet gateway for the public subnets…

One funky issue: if there is a black hole route (most likely left behind by a deleted NAT instance), this error will occur:

An exception occurred during task execution. To see the full traceback, use -vvv. The error was: TypeError: argument of type 'NoneType' is not iterable

The fastest way to fix this is to go to your private route table and delete the black hole entry.

localhost.bastion_ssh_config

The first two tasks are initialization and cleanup. The real work begins when configuring the SSH proxy host. It uses blockinfile, as it seems that config lines in ~/.ssh/config cannot end with comments (while known_hosts can):

    - name: Configure SSH proxy host.
      blockinfile:
        dest: "{{ ansible_env.HOME }}/.ssh/config"
        marker: "# {{ marker_vars|default(vpc.resource_tags.Organization) }}"
        block: |
          Host ip-{{ vpc.cidr_block.split('.')[0]}}-{{ vpc.cidr_block.split('.')[1]}}-*-*.{{ route53.domain }}
            ProxyCommand ssh -i ~/.ssh/{{ vpc.keypair }} -W %h:%p -q {{ vpc.image_user }}@{{ bastion_vars }}
            ServerAliveInterval 30

Once this completes, the ‘~/.ssh/config’ will have lines similar to this:

    # MARKER_EXAMPLE
    Host ip-10-148-*-*.compute.internal
      ProxyCommand ssh -i ~/.ssh/id_rsa -W %h:%p -q ubuntu@ec2-54-42-101-35.compute-2.amazonaws.com
      ServerAliveInterval 30

This frames the SSH config entry in a marker and sets the SSH proxy command to tunnel all traffic destined for, say, ip-10-148-1-134.us-west-2.compute.internal through the public-facing SSH bastion host. It uses the VPC variables and some splitting to link the VPC network with the proper proxy command. It does this using a key registered in AWS. The result is secure access to internal resources without a VPN.

localhost.aws_ssh_keys

A problem to overcome is… how to deal with the SSH fingerprint/public key. When using an AMI, especially the Ubuntu sanctioned AMIs in this example, the SSH host key is generated upon boot. How do you know what the fingerprint is and thus accept the public key? Normally it either has to be blindly accepted or manually checked. This role takes care of that check automatically.

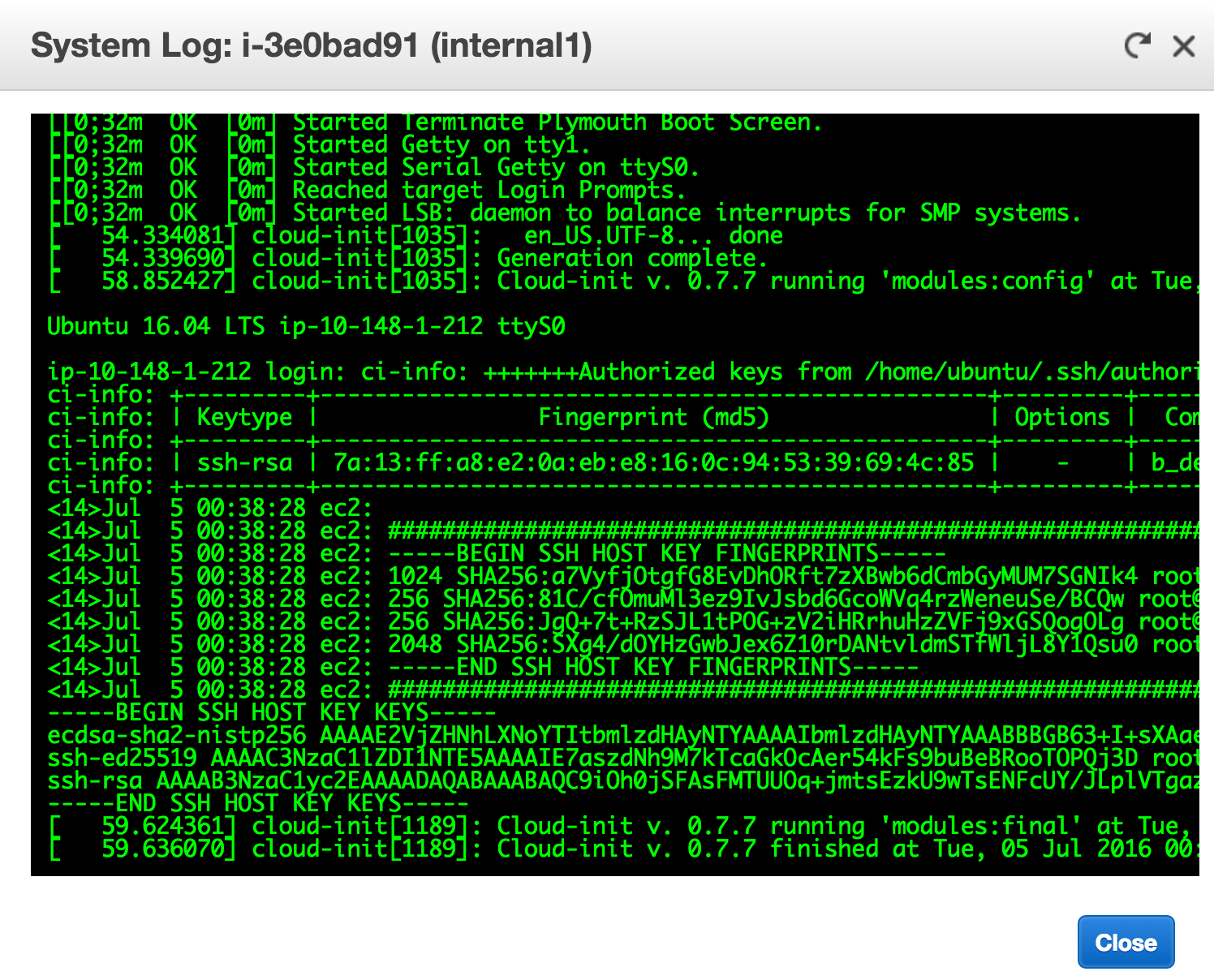

Amazon has this handy feature called Get System Log. When an instance boots up, it actually writes the SSH host key to this output. And it’s available programmatically! All that’s needed is some logic to handle the dynamic nature of the AWS infrastructure.

So all that’s needed is to get that data into Ansible (and make sure the SSH public key is echoed to the log after reboot or shutdown…).

First, the old known_hosts entries are cleaned (again, using the same marker from the localhost.bastion_ssh_config role). Then the real magic happens. The AWS CLI can pull the Get System Log output programmatically:

    - name: Get the public SSH key from the AWS system log.
      shell: aws ec2 get-console-output \
        --region {{ vpc.region }} \
        --instance-id {{ item.id }} \
        --output text|sed -n 's/^.*\(ecdsa-sha2-nistp256 \)\(.*\)/\2/p' | awk '{print $1}'
      register: host_key_results
      with_items: "{{ ec2_facts.instances }}"
      until: host_key_results.stdout != ''
      retries: 75
      environment:
        AWS_ACCESS_KEY_ID: "{{ vault.aws_access_key }}"
        AWS_SECRET_ACCESS_KEY: "{{ vault.aws_secret_key }}"

This handy command grabs the public SSH key and registers it to a variable. Note the extra awk: it keeps only the first field of what sed matched, which is the key itself, and drops anything that follows it on the line. One problem with this method is that this data is ONLY printed to the system log on first boot; after a reboot it won’t be found. That’s not too useful, since it’s relied upon for every play. This is overcome later: on all instances, there is a role that adds a command to /etc/rc.local, which prints the SSH public key to the AWS System Log on every boot. A nice way to get around that limitation. :)

[Here’s a slightly deeper dive into the SSH fingerprint/public key problem in AWS.](https://blog.billyc.io/2016/07/05/securely-gathering-ssh-public-keys-from-the-aws-system-log/)

Finally, we take the results of that SSH public key gathering and add BOTH the private DNS name and public DNS name, if it has one. Both entries are added to ~/.ssh/known_hosts.
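A sketch of how those entries could be written with lineinfile, reusing the registered host_key_results and the same marker expression as the SSH config task. It assumes each result's stdout holds exactly one key and that the instance facts expose a public_dns_name attribute that is empty or undefined for private-only instances; the role's real tasks may differ.

```yaml
# Sketch only. The sed expression strips the key type, so it is
# added back here to form a valid known_hosts line.
- name: Add private DNS names to known_hosts.
  lineinfile:
    dest: "{{ ansible_env.HOME }}/.ssh/known_hosts"
    create: yes
    line: "{{ item.item.private_dns_name }} ecdsa-sha2-nistp256 {{ item.stdout }} # {{ marker_vars|default(vpc.resource_tags.Organization) }}"
  with_items: "{{ host_key_results.results }}"

- name: Add public DNS names to known_hosts, where present.
  lineinfile:
    dest: "{{ ansible_env.HOME }}/.ssh/known_hosts"
    create: yes
    line: "{{ item.item.public_dns_name }} ecdsa-sha2-nistp256 {{ item.stdout }} # {{ marker_vars|default(vpc.resource_tags.Organization) }}"
  with_items: "{{ host_key_results.results }}"
  # public_dns_name is an assumed attribute name
  when: item.item.public_dns_name|default('') != ''
```

Because the task that registered host_key_results looped over instances, each entry in results carries both the original instance (item.item) and the extracted key (item.stdout).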

ansible.groups_init

The Ansible module add_host is handy for dynamic inventories. It adds hosts to any group. This role has a few pre-configured tags that it will add hosts to, such as an instance’s Role:

    - name: Add instances to their private 'Role' group.
      add_host:
        groups: "{{ item.tags.Role }}_private"
        hostname: "{{ item.private_dns_name }}"
      with_items:
        - "{{ ec2_facts.instances|selectattr('state', 'equalto', 'running')|list }}"
      when: item.tags.Role is defined

So any instance with the Role tag internal_system will be configured via its private DNS address when internal_system_private is used as the host group in a playbook.

ubuntu.raw_install_python

In this case, the official Ubuntu AMI comes without Python installed, which is a problem because most Ansible modules need it. So this role uses the Ansible raw module to install all necessary Ansible prerequisites.
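A minimal sketch of the idea, written as a standalone play rather than the role itself. The package name python-minimal and the explicit sudo are assumptions for an Ubuntu image of that era.

```yaml
# Sketch: install Python before any module that needs it runs.
- hosts: internal_system_private
  gather_facts: no        # fact gathering itself needs Python
  tasks:
    - name: Install Python using the raw module.
      raw: test -e /usr/bin/python || (sudo apt-get update && sudo apt-get install -y python-minimal)
```

The raw module sends the command over SSH as is, so it works on a host with no Python at all; the test at the front keeps repeat runs from reinstalling.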

instance.ssh_aws_public_key

This role gets around the issue of the SSH public key not being in the AWS System Log on reboot. By hooking /etc/rc.local, the public key is available every boot.

    - name: Add the public SSH key to AWS System Log.
      lineinfile:
        dest: /etc/rc.local
        insertbefore: 'exit 0'
        line: '/usr/bin/ssh-keygen -y -f /etc/ssh/ssh_host_ecdsa_key'

bastionhost.nat_config

This role configures the bastion host to act as a NAT instance, allowing outbound Internet access for all internal instances. It does this by installing and configuring iptables and enabling IP forwarding for the instance via sysctl.
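The two pieces could be sketched with the sysctl and iptables modules as follows. The interface name eth0 is an assumption, and the role's actual tasks may be structured differently.

```yaml
# Sketch of a NAT setup on the bastion host.
- name: Enable IP forwarding.
  sysctl:
    name: net.ipv4.ip_forward
    value: "1"
    sysctl_set: yes
    state: present
  become: yes

- name: Masquerade traffic leaving the VPC through the bastion host.
  iptables:
    table: nat
    chain: POSTROUTING
    source: "{{ vpc.cidr_block }}"
    out_interface: eth0      # assumed interface name
    jump: MASQUERADE
  become: yes
```

A rule added this way does not survive a reboot on its own, so some form of persistence would still be needed on top of this sketch.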

summary

This playbook deploys an AWS VPC, a bastion host, and internal instances, gathers the SSH public keys in a secure fashion and configures them, and provides SSH access to the remote hosts without the need for a VPN. It can be extended to deploy an entire stack, everything from additional instance roles, to an ELB, to an Amazon RDS database. It’s just a start on what I found to be a weird problem.

-b

links

- https://github.com/bonovoxly/playbook/tree/masters

- AWS Security Post on Bastion Hosts

- Ansible add_host module

- SSH fingerprints from the AWS System Log

some notes on errors

If you’re working through this playbook, and you see an error similar to:

r.gateway_id not in propagating_vgw_ids]\nTypeError: argument of type 'NoneType' is not iterable\n", "module_stdout": "", "msg": "MODULE FAILURE", "parsed": false}

it means you’ve got a black hole route in AWS and Ansible is choking on it. This usually happens if the default gateway instance has been deleted, with the route still using it. Simply remove the route in AWS and things should work.
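To find the offending route table without clicking through the console, something like the following could be run first. It is a sketch that assumes the AWS CLI is installed locally; it only lists route tables and deletes nothing.

```yaml
# Sketch: list route tables that contain at least one black hole route.
- name: Find route tables with a black hole route.
  command: >
    aws ec2 describe-route-tables
    --region {{ vpc.region }}
    --filters Name=route.state,Values=blackhole
    --query RouteTables[].RouteTableId
    --output text
  register: blackhole_tables
  changed_when: false
  environment:
    AWS_ACCESS_KEY_ID: "{{ vault.aws_access_key }}"
    AWS_SECRET_ACCESS_KEY: "{{ vault.aws_secret_key }}"

- name: Show the affected route tables.
  debug:
    msg: "Black hole routes found in: {{ blackhole_tables.stdout.split() }}"
```

The listed route tables can then be inspected and the stale route removed by hand.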

future improvements

A few things worth mentioning on how this can be improved.

- Create a dedicated security group that allows SSH access from the bastionhost. Instead of unioning the rules, multiple security groups are applied to the instance.

- Break out the bastion host configuration from the SSH fingerprint/public key configuration.

- Add a section for uploading/modifying the AWS SSH key.
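The first improvement above could be sketched like this: a separate group whose only rule allows SSH from members of the bastion host's security group, rather than from a single /32 address. The variable names securitygroups_ssh_from_bastion and securitygroups_bastionhost are hypothetical.

```yaml
# Sketch: dedicated group that allows SSH from the bastion host's group.
- name: Create a dedicated SSH-from-bastion security group.
  ec2_group:
    aws_secret_key: "{{ vault.aws_secret_key }}"
    aws_access_key: "{{ vault.aws_access_key }}"
    region: "{{ vpc.region }}"
    description: "Allow SSH from the bastion host security group."
    name: "{{ securitygroups_ssh_from_bastion.name }}"
    rules:
      - proto: tcp
        from_port: 22
        to_port: 22
        # reference another group by name instead of a CIDR
        group_name: "{{ securitygroups_bastionhost.name }}"
    vpc_id: "{{ vpc_id_fact }}"
```

That group could then be listed alongside an instance's other groups when the instance is created, instead of merging the SSH rule into each role's own group.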
