securely gathering SSH public keys from the AWS System Log

A spin-off from "Ansible SSH bastion host for dynamic infrastructure in AWS", this post documents how to gather an EC2 instance's SSH public host key from the AWS System Log.

background

For a while I was stumped about how to handle AWS, AMIs, and SSH host fingerprints/public keys. Initially, the answer was pre-baked AMIs. But what if pre-baking AMIs isn’t realistic? Or what if the AMIs are baked without SSH host keys, so the keys are generated at first boot and aren’t known ahead of time?

This exact issue came up while I was working at PokitDok.

So that’s the rub: the SSH host public key must be known and verified to avoid the risk of being man-in-the-middle’d (at least as much as can be helped). How to do that?

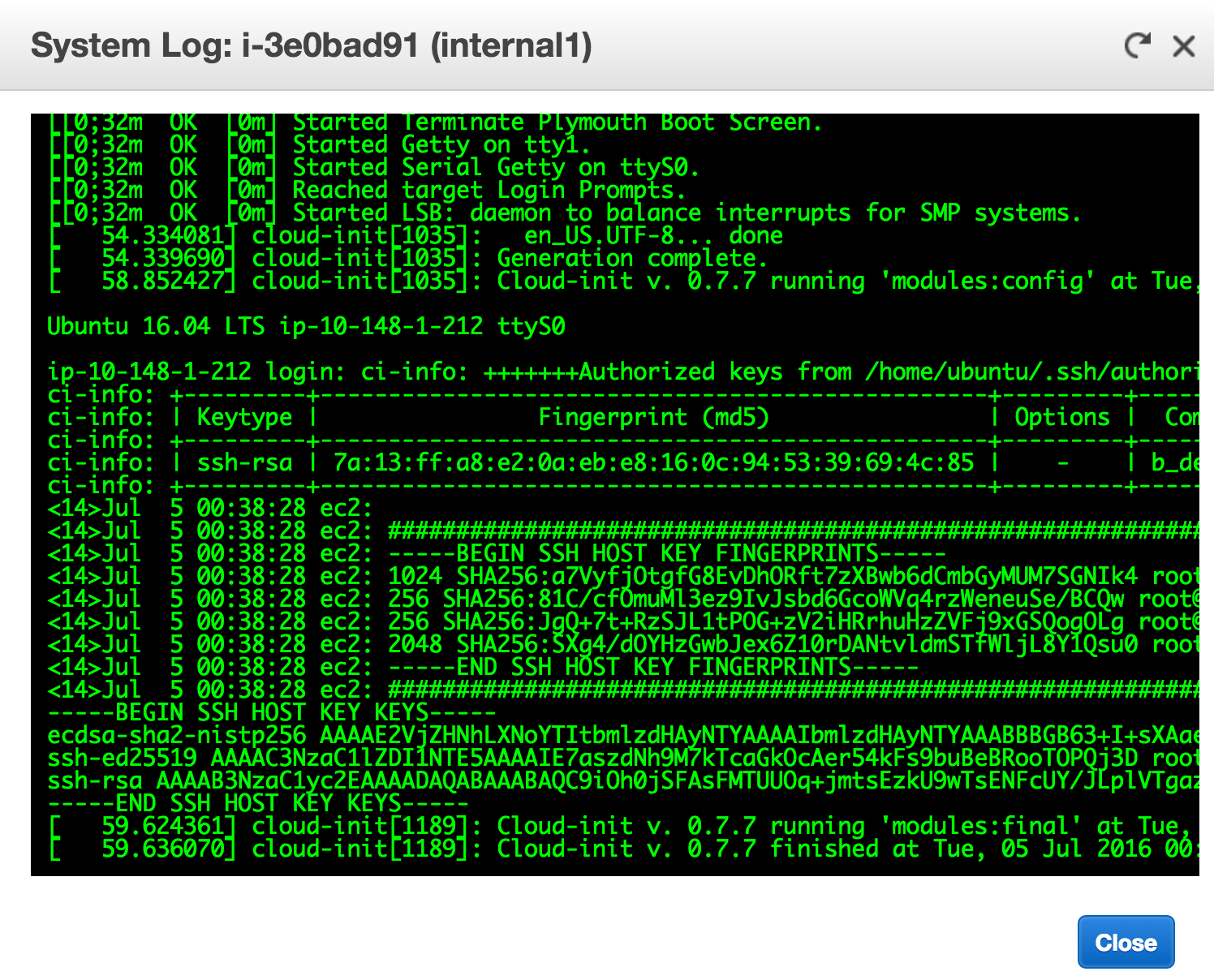

Cue the Amazon feature Get System Log. When an instance boots for the first time, it writes its SSH host public keys to this output, and that output is available programmatically via aws ec2 get-console-output. All that’s needed is some logic to handle the dynamic nature of the AWS infrastructure. Because the data comes over the authenticated, SSL-protected AWS API, the SSH public key can be collected from a trusted source and local SSH configurations can be updated.
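For poking at the same data outside Ansible, here is a minimal Python sketch of the programmatic call. It assumes a boto3 EC2 client is passed in; boto3's get_console_output is the SDK counterpart of aws ec2 get-console-output, and botocore base64-decodes the Output field on the way back. The helper names fetch_console_output and fetch_all are hypothetical, not part of the role.

```python
from typing import Any, Dict, Iterable


def fetch_console_output(ec2_client: Any, instance_id: str) -> str:
    """Return the System Log (console output) text for one instance.

    ec2_client is assumed to be a boto3 EC2 client. The Output key can be
    missing when the instance has not written any console output yet, so
    an empty string is returned in that case (a "best effort" miss).
    """
    response = ec2_client.get_console_output(InstanceId=instance_id)
    return response.get("Output", "")


def fetch_all(ec2_client: Any, instance_ids: Iterable[str]) -> Dict[str, str]:
    """Fetch the System Log for every instance ID, keyed by ID."""
    return {iid: fetch_console_output(ec2_client, iid) for iid in instance_ids}
```

With boto3 installed and credentials in the environment, something like fetch_all(boto3.client("ec2", region_name="us-west-2"), instance_ids) would return the raw System Log text per instance. Passing the client in keeps the helpers easy to exercise with a stub.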

Now to get that data.

the localhost.aws_ssh_keys role

The core of this role starts by scrubbing the ~/.ssh/known_hosts file: all tagged entries (lines ending in a comment that contains marker_vars) are removed, and then the current entries are re-added on every playbook run. This ensures that OLD entries for instances that are no longer valid get removed:

```yaml
- name: Remove all old known_hosts entries.
  lineinfile:
    dest: "{{ ansible_env.HOME }}/.ssh/known_hosts"
    regexp: "^.* # {{ marker_vars|default(env) }}"
    state: absent
```
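As a minimal sketch of what that task does, treating known_hosts as plain text, the Python below drops every line the pattern matches and keeps the rest. scrub_known_hosts is a hypothetical helper; unlike the Ansible task it escapes the marker, so regex metacharacters in a marker are matched literally. Like the original pattern it is not anchored at the end, so a marker that is a prefix of another (PROD vs PROD2) also removes the longer marker's entries.

```python
import re


def scrub_known_hosts(text: str, marker: str) -> str:
    """Remove every known_hosts line tagged with ' # <marker>'.

    Mirrors the lineinfile task: '^.* # <marker>' is searched against each
    line, so untagged entries and entries with unrelated markers are kept.
    There is no end anchor, so 'PROD' also matches lines tagged 'PROD2'.
    """
    pattern = re.compile(r"^.* # " + re.escape(marker))
    kept = [line for line in text.splitlines() if not pattern.search(line)]
    # Keep a trailing newline when anything is left.
    return "\n".join(kept) + "\n" if kept else ""
```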

Once the old entries are removed, the current keys are gathered with the AWS CLI tool by pulling the System Log programmatically:

```yaml
# note - AWS access and secret keys need to be defined via environment variables
- name: Get SSH public key from AWS System Log - this is BEST EFFORT. May miss the SSH public key.
  shell: |
    aws ec2 get-console-output \
      --region {{ vpc.region }} \
      --instance-id {{ item.id }} \
      --output text | sed -n 's/^.*\(ecdsa-sha2-nistp256 \)\(.*\)/\2/p' | tail -n 1 | awk '{print substr($1, 1, 140)}'
  register: host_key_results
  with_items: "{{ ec2_facts.instances }}"
```

This handy task fetches the System Log for each instance and looks for lines containing ecdsa-sha2-nistp256, which carry the public key data. sed keeps only the text after the key type, tail keeps the last match, and awk keeps the first whitespace-separated field, trimmed to 140 characters (the length of a base64-encoded ecdsa-sha2-nistp256 key). It does this for every host retrieved from ec2_facts.instances and registers the output to host_key_results. ec2_facts.instances holds the results of the aws.ec2_facts role, a role which collects EC2 facts using a specific filter; it has a slew of data about all instances within our VPC. Because the task uses that result as its with_items list, it retrieves the System Log for every instance found in that fact.
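To make the pipeline's behaviour concrete, here is a minimal Python sketch that mirrors it step by step; extract_ecdsa_key is a hypothetical name. The test input assumes the serial console ends lines with \r\n, which is one plausible reason for the 140-character trim: awk's default field splitting only breaks on spaces and tabs, so a trailing \r would otherwise stay glued to the key.

```python
import re

KEY_PREFIX = "ecdsa-sha2-nistp256 "
# Length of a base64-encoded ecdsa-sha2-nistp256 public key blob (104 bytes -> 140 chars).
NISTP256_B64_LEN = 140


def extract_ecdsa_key(console_output: str) -> str:
    """Mirror the sed | tail | awk pipeline from the Ansible task.

    Returns '' when no key line is present, just like the shell pipeline.
    """
    matches = []
    for line in console_output.split("\n"):  # sed works on \n-separated lines
        idx = line.rfind(KEY_PREFIX)  # greedy '^.*' lands on the LAST occurrence
        if idx != -1:
            matches.append(line[idx + len(KEY_PREFIX):])  # sed's \2
    if not matches:
        return ""
    last = matches[-1]  # tail -n 1
    # awk's default field splitting uses blanks (spaces/tabs); a trailing \r stays attached.
    first_field = re.split(r"[ \t]+", last.strip(" \t"))[0]  # awk $1
    return first_field[:NISTP256_B64_LEN]  # substr(..., 1, 140)
```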

NOTE - this role USED to wait for instances that hadn’t started up yet (like newly created ones), using the handy new until feature in Ansible. However, that was frustrating whenever it couldn’t find a key (a blank System Log, or another unconfigured instance that matched the filter). The task is now “best effort”: if it doesn’t find the key, it doesn’t find it. Again, this is due to the unreliability of the AWS Get System Log; sometimes the data never appears. I’ve seen this once or twice, usually when deploying a lot of instances at once… The way forward was to terminate the instance and redeploy (it was the initial boot, so it was still an unconfigured instance). :sadpanda:

Charging forward… The playbook takes the public keys gathered in host_key_results and adds entries for BOTH the private DNS name (with the private IP address) and the public DNS name, if the instance has one. Both entries are added to ~/.ssh/known_hosts.

```yaml
- name: Import SSH public keys - private IP addresses.
  lineinfile:
    dest: "{{ ansible_env.HOME }}/.ssh/known_hosts"
    line: "{{ item.0.private_dns_name }},{{ item.0.private_ip_address }} ecdsa-sha2-nistp256 {{ item.1.stdout }} # {{ marker_vars|default(vpc.resource_tags.Organization) }}"
  # skip instances whose best-effort key lookup came back empty
  when: item.0.state == 'running' and item.1.stdout != ''
  with_together:
    - "{{ ec2_facts.instances }}"
    - "{{ host_key_results.results }}"

- name: Import SSH public keys - public IP addresses.
  lineinfile:
    dest: "{{ ansible_env.HOME }}/.ssh/known_hosts"
    line: "{{ item.0.public_dns_name }} ecdsa-sha2-nistp256 {{ item.1.stdout }} # {{ marker_vars|default(vpc.resource_tags.Organization) }}"
  when: item.0.state == 'running' and item.0.public_dns_name != '' and item.1.stdout != ''
  with_together:
    - "{{ ec2_facts.instances }}"
    - "{{ host_key_results.results }}"
```

with_together is very handy for parallel lists. Since host_key_results is produced by looping over ec2_facts.instances, the two lists line up 1:1, so each host key maps to its own instance’s private and public DNS names. Every SSH public key that was found is accounted for.
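The pairing done by with_together can be sketched in Python with zip, assuming each instance is a dict carrying the same fields the tasks reference (state, private_dns_name, private_ip_address, public_dns_name). known_hosts_lines is a hypothetical helper; it also skips instances whose key lookup came back empty, since a best-effort miss would otherwise produce a line with no key. zip stops at the shorter list while with_together pads with None, but the two behave the same here because the lists are 1:1.

```python
from typing import Dict, List


def known_hosts_lines(instances: List[Dict[str, str]], host_keys: List[str], marker: str) -> List[str]:
    """Render known_hosts lines for running instances, pairing lists like with_together.

    instances and host_keys are assumed to be 1:1 and in the same order.
    """
    lines = []
    for inst, key in zip(instances, host_keys):
        # Skip non-running instances and best-effort misses (empty key).
        if inst.get("state") != "running" or not key:
            continue
        entry = f"ecdsa-sha2-nistp256 {key} # {marker}"
        # Private entry: DNS name and IP address, comma-separated.
        lines.append(f"{inst['private_dns_name']},{inst['private_ip_address']} {entry}")
        # Public entry only when the instance has a public DNS name.
        if inst.get("public_dns_name"):
            lines.append(f"{inst['public_dns_name']} {entry}")
    return lines
```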

Once the tasks complete, ~/.ssh/known_hosts will have lines similar to these:

```
ip-10-148-1-134.us-west-2.compute.internal,10.148.1.134 ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBBrl8XPTWLeHH7817PPVXOM53LlIbHxdsa5Ms21BNK2JY9WOwiEOcK1Xm+wGXpmop1l28nZX90CgkZQMAmTZeIU= # MARKER_EXAMPLE
ip-10-148-1-67.us-west-2.compute.internal,10.148.1.67 ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBHPW+eCoLBGbS7TLUz6d4gukL4UxOElH++azqwC5DCvRGhp+vL+446d1OXU4AvJWQMLKsW3GVarPch73Lgi/qRM= # MARKER_EXAMPLE
```
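Since the whole point is to trust these keys, a cheap sanity check before writing them is to decode each blob and confirm its structure. Per RFC 5656, an ecdsa-sha2-nistp256 public key is three SSH strings (each a 4-byte big-endian length followed by data): the key type, the curve name nistp256, and the public point, which OpenSSH encodes uncompressed as 0x04 followed by 32-byte X and Y coordinates. is_valid_nistp256_key is a hypothetical helper, not part of the role; it checks the format only and does not verify that the point actually lies on the curve.

```python
import base64
import struct
from typing import Tuple


def _read_ssh_string(blob: bytes, offset: int) -> Tuple[bytes, int]:
    """Read one SSH wire-format string (uint32 length + bytes); return (data, next_offset)."""
    if offset + 4 > len(blob):
        raise ValueError("truncated length field")
    (length,) = struct.unpack(">I", blob[offset:offset + 4])
    end = offset + 4 + length
    if end > len(blob):
        raise ValueError("truncated string")
    return blob[offset + 4:end], end


def is_valid_nistp256_key(b64_key: str) -> bool:
    """Structural check of an ecdsa-sha2-nistp256 public key blob (RFC 5656 layout)."""
    try:
        blob = base64.b64decode(b64_key, validate=True)  # binascii.Error is a ValueError
        key_type, off = _read_ssh_string(blob, 0)
        curve, off = _read_ssh_string(blob, off)
        point, off = _read_ssh_string(blob, off)
    except ValueError:
        return False
    return (
        key_type == b"ecdsa-sha2-nistp256"
        and curve == b"nistp256"
        and len(point) == 65  # uncompressed point: 0x04 || X (32 bytes) || Y (32 bytes)
        and point[0] == 0x04
        and off == len(blob)  # nothing left over
    )
```

A key that fails this check (for example one cut short by a garbled console line) is better left out of known_hosts than written in.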

The markers make it possible to modify ONLY the relevant entries without stomping on any others. They also allow the file to be cleaned, since in a dynamic inventory some instances/IP addresses may no longer exist.

instance.ssh_aws_public_key

There is a minor catch to grabbing the AWS System Log: this data is not available in the System Log after a reboot or a shutdown. It is only printed once, at the initial boot. That’s not good; if a reboot occurs, if a shutdown and startup occurs, or if an AWS infrastructure event occurs, the playbook won’t find the key. How should this be solved?

The AWS System Log captures the console output of the boot sequence. So the trick is to get the SSH public key printed during every boot, not just the first one. A very quick and seemingly durable way to do this is to add a command to the script /etc/rc.local, using the instance.ssh_aws_public_key role:

```yaml
- name: Add SSH public key to AWS System Log.
  lineinfile:
    dest: /etc/rc.local
    insertbefore: 'exit 0'
    line: '/usr/bin/ssh-keygen -y -f /etc/ssh/ssh_host_ecdsa_key'
```
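For reference, this is roughly what lineinfile does with line plus insertbefore (and no regexp), sketched in Python under the assumption of an Ubuntu-style rc.local whose header comment also mentions "exit 0". insert_before_last is a hypothetical helper that only approximates the module: it changes nothing if the exact line already exists, otherwise it inserts before the last matching line, falling back to the end of the file.

```python
import re
from typing import List


def insert_before_last(lines: List[str], new_line: str, before_pattern: str) -> List[str]:
    """Approximate Ansible lineinfile with line + insertbefore (no regexp).

    Returns a new list; the input is left unchanged.
    """
    # Idempotent: an exact copy of the line already present means no change.
    if new_line in lines:
        return list(lines)
    pattern = re.compile(before_pattern)
    # insertbefore is searched anywhere in each line; by default the LAST match wins.
    hits = [i for i, line in enumerate(lines) if pattern.search(line)]
    if not hits:
        return list(lines) + [new_line]  # no match: append at end of file
    idx = hits[-1]
    return lines[:idx] + [new_line] + lines[idx:]
```

Matching the last occurrence is what keeps the command out of the header comment and places it just before the real exit 0.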

Unfortunately, I’ve also seen problems with this. I think (though I haven’t fully investigated) there’s a potential race condition between when the rc.local script runs and when AWS stops capturing boot output. So there’s a second task to help ensure that the SSH public key is written to the System Log:

```yaml
- name: Add SSH public key to cloud-init output as well.
  copy:
    src: ssh-to-system-log.sh
    dest: /var/lib/cloud/scripts/per-boot/ssh-to-system-log.sh
    mode: '0755'
```

These steps help ensure that after each reboot the key is available in the System Log and captured by the playbook.

summary

Hopefully others find this little trick useful. Accessing the AWS System Log really should be its own module; I find it that handy. There’s a level of infosec comfort in knowing that the SSH keys have been verified and are trustworthy. It also fits the dynamic nature of AWS and its instances: the SSH keys don’t need to be known or added ahead of time, and they don’t need to be blindly trusted on first connection.

-b