deploying Kubernetes 1.7.3 using Terraform and Ansible

what and why

In a previous post, I walked through an infrastructure deployment of a Kubernetes stack to AWS. I have come back to it a few times, attempting to clean up the documentation, streamline the process, and improve the accessibility of the overall project. This time, I wanted to modernize it to match the current major release of Kubernetes. There were other reasons too: modernizing it would allow me to explore some interesting topics like NGINX ingress controllers, Prometheus, OAuth integration, Istio, Helm, and Kubernetes Operators.

This blog post will cover the deployment of Kubernetes 1.7 to AWS. It walks through the following:

- Using Terraform, create the AWS infrastructure, including the VPC, subnets, routing, AWS NAT gateway, and OpenVPN instance.

- Using Ansible, deploy and configure that OpenVPN instance.

- Using Terraform, create the Kubernetes infrastructure.

- Using Ansible, deploy and configure the CFSSL instance.

- Using Ansible, deploy and configure the Kubernetes cluster, including Weave Net and kube-dns Kubernetes daemonsets/deployments.

I tried to break up the steps this time, to help support different environments and simplify things. Already have a VPC infrastructure? It’s possible to tweak the Kubernetes Terraform settings and variables to shoe-horn it into that existing infrastructure.

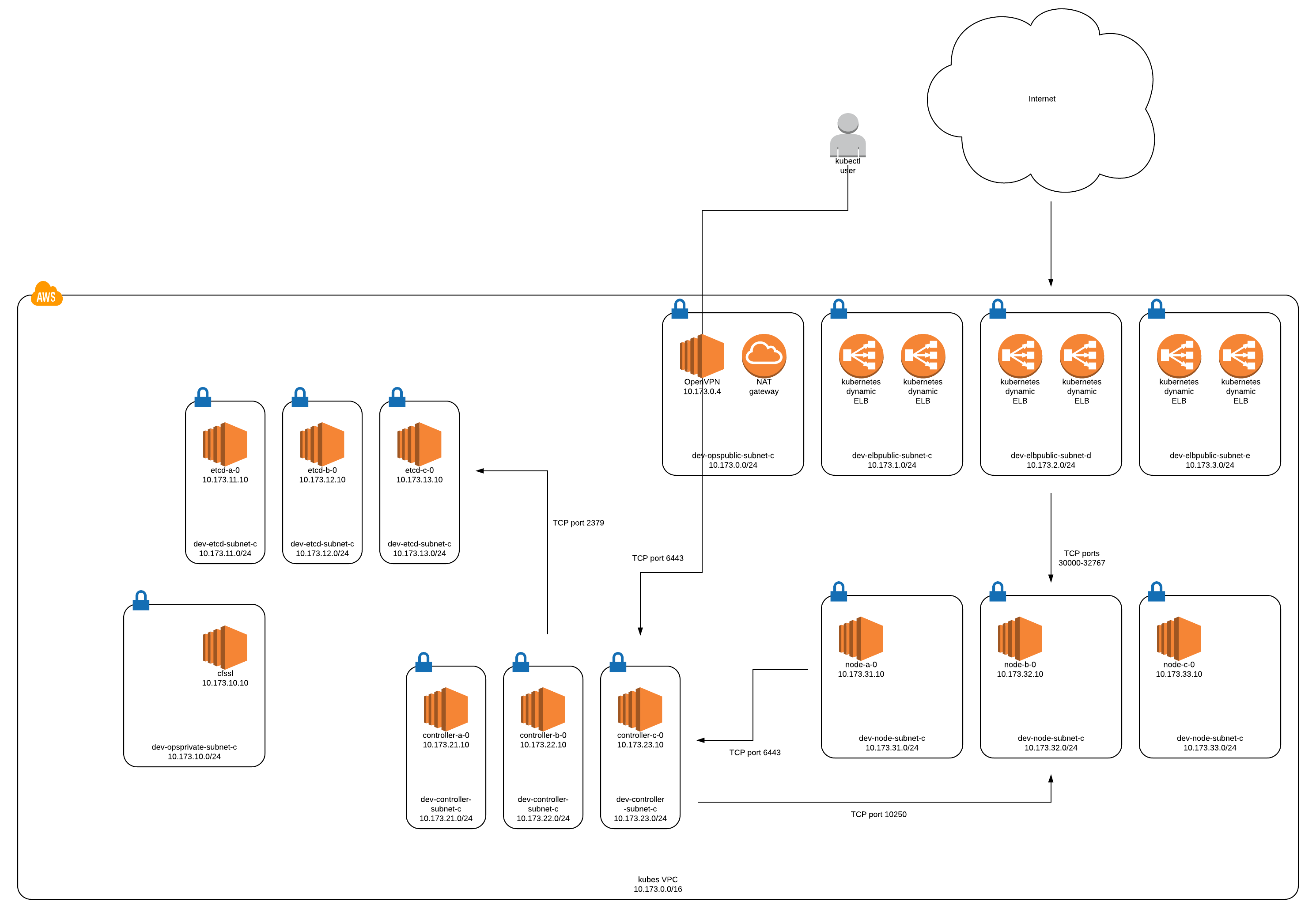

In the end, the Kubernetes infrastructure will look like this:

To deploy it, the following GitHub repositories are used:

- the Terraform repo - https://github.com/bonovoxly/terraforms

- the Ansible repo - https://github.com/bonovoxly/playbook

The following steps bounce back and forth between repos.

important variables and settings of note

There are a few configurations, settings, and defaults that are important to note. For Terraform, there are two variables files that are relevant (the AWS and Kubernetes variables):

- dns - the private DNS zone for Route53. Used for internal DNS resolution.

- env - a unique identifier, meant to represent the environment.

- cidr - the first two octets of the VPC CIDR.

- keypair - the AWS SSH keypair to use. Needs to exist in AWS first.

- kubernetes_cluster_id - the Kubernetes cluster ID. It’s a tag that is used by Kubernetes to identify the subnet to use for ELB creation.

- region - the AWS region.

Make sure the two variables files match. It might be simpler to symlink them in the future…
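One way to keep the two Terraform projects in sync is to set the shared values once in the shell instead of editing both variables files. This is only a sketch, assuming each project declares the variables it needs under the names listed above. Terraform reads an environment variable named TF_VAR_<name> as the value of the input variable <name>. The dns, env, keypair, and kubernetes_cluster_id values below are placeholders, not values taken from the repositories.

```shell
# Shared settings for both Terraform projects (dev-aws-vpc and dev-kubernetes-1.7).
# Terraform maps TF_VAR_<name> to the input variable <name>.
export TF_VAR_dns="example.internal"           # placeholder private Route53 zone
export TF_VAR_env="dev"                        # placeholder environment identifier
export TF_VAR_cidr="10.173"                    # first two octets of the VPC CIDR
export TF_VAR_keypair="my-keypair"             # placeholder; must already exist in AWS
export TF_VAR_kubernetes_cluster_id="dev-k8s"  # placeholder cluster ID tag
export TF_VAR_region="us-east-1"               # AWS region

# Print what Terraform will pick up, as a quick sanity check before plan/apply.
env | grep '^TF_VAR_' | sort
```

With these exported, terraform plan in either project directory sees the same values. The Ansible inventory is a separate file and still has to be edited to match.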

Ansible will require matching settings. These can be found in the inventory file:

- dns - should match the Terraform private DNS zone.

- env - should match the Terraform unique identifier.

- cidr - modify the IP addresses of each group to match the specified CIDR address. Default should match (10.173).

- kubernetes_cluster_id - the Kubernetes cluster_name.

- region - the AWS region.

Some other general notes:

- This Kubernetes deployment uses weave-net as the CNI plugin. Weave by default uses a CIDR of 10.32.0.0/12, which is 10.32.0.0-10.47.255.255.

- Weave is deployed with a randomly generated password to encrypt communication between nodes.

- The Kubernetes settings can be found here. These control settings ranging from Kubernetes cluster DNS domain, to Docker versions, to service cluster IP ranges.
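The Weave range noted above matters if the cidr setting is changed, since the VPC should not overlap it. The following POSIX sh sketch reproduces the 10.32.0.0-10.47.255.255 arithmetic and checks for overlap. It assumes the VPC is a /16 built from the two octets in cidr (the post only states the first two octets), and the function names are made up for illustration.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address on dots into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Convert an integer back to dotted-quad form.
int_to_ip() {
    echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Print the last address covered by a CIDR block such as 10.32.0.0/12.
# Assumes the address part is already the network address of the block.
cidr_last() {
    start=$(ip_to_int "${1%/*}")
    int_to_ip $(( start + (1 << (32 - ${1#*/})) - 1 ))
}

# Succeed (exit status 0) if the two CIDR blocks share any address.
cidr_overlap() {
    a_start=$(ip_to_int "${1%/*}")
    a_end=$(( a_start + (1 << (32 - ${1#*/})) - 1 ))
    b_start=$(ip_to_int "${2%/*}")
    b_end=$(( b_start + (1 << (32 - ${2#*/})) - 1 ))
    [ "$a_start" -le "$b_end" ] && [ "$b_start" -le "$a_end" ]
}

cidr_last 10.32.0.0/12    # prints 10.47.255.255

# 10.173.0.0/16 is the assumed VPC block for the default cidr of 10.173.
if cidr_overlap 10.32.0.0/12 10.173.0.0/16; then
    echo "VPC range overlaps the Weave range"
else
    echo "VPC range does not overlap the Weave range"
fi
```

A VPC prefix anywhere from 10.32 through 10.47 would fall inside the Weave default, so it is worth running a check like this before picking a different cidr.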

dev-aws-vpc Terraform

The first step, if there is no AWS VPC, is to deploy the infrastructure. As the README.md notes, this deploys a VPC, subnets, routes, an AWS managed NAT gateway, a private Route53 DNS zone, and an OpenVPN instance. Modify the variables.tf accordingly. To deploy the infrastructure:

git clone https://github.com/bonovoxly/terraforms # if you haven't cloned the repo

cd terraforms/dev-aws-vpc

terraform init # if terraform >= 0.10

terraform plan

terraform apply

deploying and configuring the OpenVPN instance

Once the Terraform is complete, gather the Elastic IP that was associated with the OpenVPN instance. Edit the Ansible inventory file (inventory/dev-kubernetes-1.7/inventory) and modify the openvpn-public group (change the ELASTICIPHERE), setting it to the newly created Elastic IP address.
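The inventory edit can also be scripted. This is a minimal sketch, assuming ELASTICIPHERE appears literally in the inventory file as described above; set_openvpn_ip is a hypothetical helper name, and 203.0.113.10 is a documentation address standing in for the real Elastic IP.

```shell
# Replace the ELASTICIPHERE placeholder in an inventory file with the given IP.
# Writes through a temporary file instead of `sed -i`, whose syntax differs
# between GNU sed (Linux) and BSD sed (OS X).
set_openvpn_ip() {
    inventory=$1
    ip=$2
    tmp=$(mktemp) || return 1
    sed "s/ELASTICIPHERE/$ip/g" "$inventory" > "$tmp" \
        && cat "$tmp" > "$inventory"
    status=$?
    rm -f "$tmp"
    return $status
}

# Example, run from the root of the playbook repo:
# set_openvpn_ip inventory/dev-kubernetes-1.7/inventory 203.0.113.10
```

Writing back with cat rather than mv keeps the inventory file's original permissions.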

It is recommended to scrape the AWS system log to get the instance's public SSH keys (post about it here):

git clone https://github.com/bonovoxly/playbook # if you haven't cloned the repo

cd playbook

ansible-playbook localhost_ssh_scan.yml -e region=us-east-1

Now to configure the OpenVPN instance (in this example it is a split tunnel VPN, tunneling only traffic to the 10.173 network through the VPN):

git clone https://github.com/bonovoxly/playbook # if you haven't cloned the repo

cd playbook

ansible-playbook -i inventory/dev-kubernetes-1.7 openvpn.yml

Import the OpenVPN profile (it is copied to the Desktop). Connect using any OpenVPN software, such as Tunnelblick for OS X; Ubuntu supports OpenVPN natively.

dev-kubernetes-1.7 Terraform

Now back to Terraform to deploy all the components required to run Kubernetes in AWS. As the README.md describes, this Terraform project deploys:

- a CFSSL instance.

- three etcd instances for the etcd cluster.

- three Kubernetes controller instances for the Kubernetes controller cluster.

- three Kubernetes nodes (kubelets).

- EFS storage (NFS backend if needed).

It also configures a few less obvious things, such as Route53 records, IAM policies, and security groups.

To deploy:

cd terraforms/dev-kubernetes-1.7

terraform init # if terraform >= 0.10

terraform plan

terraform apply

deploying and configuring CFSSL

CFSSL takes care of the TLS certificate generation for the various components of Kubernetes. This playbook configures CFSSL to run in a Docker container on a dedicated CFSSL instance. This serves as an internal PKI server (poor man’s Hashicorp Vault).

Again, it is recommended to scrape the AWS system log to get the public SSH keys:

git clone https://github.com/bonovoxly/playbook # if you haven't cloned the repo

cd playbook

ansible-playbook localhost_ssh_scan.yml -e region=us-east-1

Then, deploy CFSSL:

git clone https://github.com/bonovoxly/playbook # if you haven't cloned the repo

cd playbook

ansible-playbook -i inventory/dev-kubernetes-1.7/inventory cfssl_deploy.yml

deploying and configuring the Kubernetes cluster using Ansible

This is the meat of it all: the deployment of etcd, the Kubernetes control plane, the Kubernetes kubelets, and the Kubernetes deployments of Weave Net and kube-dns.

git clone https://github.com/bonovoxly/playbook # if you haven't cloned the repo

cd playbook

ansible-playbook -i inventory/dev-kubernetes-1.7/inventory kubernetes-1.7_deploy.yml

Once finished, the kubectl config file (serviceaccount.kubeconfig) is saved in root’s home directory on the Kubernetes controller instances. Copy this to your local system (~/.kube/config) and test accordingly.
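Copying straight over ~/.kube/config would overwrite any config already on the local system. The sketch below installs the file with a backup first. It assumes serviceaccount.kubeconfig has already been copied from a controller to the local machine; install_kubeconfig is a hypothetical helper name, not something from the playbook repo.

```shell
# Install a downloaded kubeconfig as the local kubectl config,
# keeping a backup of any config that is already there.
install_kubeconfig() {
    src=$1
    dest=${2:-$HOME/.kube/config}
    mkdir -p "$(dirname "$dest")" || return 1
    if [ -f "$dest" ]; then
        cp "$dest" "$dest.bak" || return 1
    fi
    cp "$src" "$dest" && chmod 600 "$dest"
}

# Example, once serviceaccount.kubeconfig is on the local system
# and the VPN is connected:
# install_kubeconfig ./serviceaccount.kubeconfig
# kubectl get nodes
# kubectl get pods --namespace kube-system
```

If the cluster is healthy, the first kubectl command should list the nodes and the second should show the Weave Net and kube-dns pods.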

conclusion

There it is, Kubernetes 1.7.3, with some extras like OpenVPN and CFSSL. It has everything needed to get started with Kubernetes.

links

- AWS/VPC Terraform - https://github.com/bonovoxly/terraforms/tree/master/dev-aws-vpc

- Kubernetes 1.7 Terraform - https://github.com/bonovoxly/terraforms/tree/master/dev-kubernetes-1.7

- Ansible playbooks - https://github.com/bonovoxly/playbook